Tutorial: Exporting updated tickets to a CSV file

This tutorial shows you how to write two Python scripts to get ticket data, then export and format the data to a CSV file.

Unlike exporting ticket view information, which retrieves tickets based on specific criteria, this tutorial uses the Zendesk Incremental API to fetch all tickets updated since a specific timestamp.

The first example exports Zendesk tickets incrementally starting from a specified date and saves the retrieved ticket data to a CSV file.

The second example retrieves Zendesk tickets updated since a specified date and includes user, group, and organization information. This provides a more detailed view of your tickets by including requester and assignee details.

You don't have to use the API to export tickets to a CSV file. You can use the export view feature in the Zendesk admin interface. See Exporting a view to a CSV file.

Disclaimer: Zendesk provides this article for instructional purposes only. Zendesk does not support or guarantee the code. Zendesk also can't provide support for third-party technologies such as Postman or Python.

What you need

- Admin role in a Zendesk account

- Python

- requests library

- Environment variables set for ZENDESK_API_TOKEN, ZENDESK_USER_EMAIL, and ZENDESK_SUBDOMAIN for authentication

- API token for your Zendesk account
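Before running either script, it can help to confirm that the three environment variables are visible to Python. The following is a minimal sketch and not part of the tutorial's scripts. The helper name missing_env_vars is hypothetical, and it only checks that the variables are set, not that the credentials are valid.

```python
import os

REQUIRED_VARS = ("ZENDESK_API_TOKEN", "ZENDESK_USER_EMAIL", "ZENDESK_SUBDOMAIN")


def missing_env_vars(environ=os.environ, required=REQUIRED_VARS):
    """Return the names of required variables that are unset or empty."""
    return [name for name in required if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All required environment variables are set.")
```

Reporting the missing names individually makes a configuration mistake easier to spot than a single generic error message.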

Exporting Zendesk tickets

In this example, you'll export Zendesk tickets incrementally starting from a specified date and save the retrieved ticket data to a CSV file. The code handles API authentication and pagination to retrieve all tickets.

If the API responds with a 429 Too Many Requests status code, it means you exceeded the allowed request quota.

- When a 429 response is received, the code checks Zendesk’s Retry-After header to decide how long to wait before retrying.

- The number of retries is limited to avoid indefinite waiting. If exceeded, the script stops and reports the failure.
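The retry behavior described above can be sketched in isolation. This is a simplified illustration rather than the tutorial's code: get_with_retry, retry_delay, the fetch callable, and the FakeResponse class are hypothetical names used so the example runs without network access. It assumes the response object exposes status_code and headers, as requests.Response does.

```python
import time


def retry_delay(headers, default=5):
    """Return the wait in seconds from a Retry-After header, or a default."""
    value = str(headers.get("Retry-After", "")).strip()
    return int(value) if value.isdigit() else default


def get_with_retry(fetch, max_retries=5, sleep=time.sleep):
    """Call fetch() until it returns a non-429 response.

    Raises RuntimeError once max_retries waits have been used up.
    """
    retries = 0
    while True:
        response = fetch()
        if response.status_code != 429:
            return response
        if retries >= max_retries:
            raise RuntimeError("Max retry limit reached due to rate limiting.")
        sleep(retry_delay(response.headers))
        retries += 1


class FakeResponse:
    """Stand-in for requests.Response, for demonstration only."""

    def __init__(self, status_code, headers=None):
        self.status_code = status_code
        self.headers = headers or {}


if __name__ == "__main__":
    # Simulate two rate-limited replies followed by a success.
    replies = iter([
        FakeResponse(429, {"Retry-After": "2"}),
        FakeResponse(429),
        FakeResponse(200),
    ])
    waits = []  # record the requested waits instead of actually sleeping
    result = get_with_retry(lambda: next(replies), sleep=waits.append)
    print(result.status_code, waits)
```

Passing the sleep function as a parameter is a design choice made for this sketch only: it lets you test the retry logic without waiting.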

    import os
    import time
    import csv
    import requests
    from datetime import datetime

    # Load Zendesk API credentials and subdomain from environment variables
    ZENDESK_API_TOKEN = os.getenv('ZENDESK_API_TOKEN')
    ZENDESK_USER_EMAIL = os.getenv('ZENDESK_USER_EMAIL')
    ZENDESK_SUBDOMAIN = os.getenv('ZENDESK_SUBDOMAIN')

    # Exit if any required environment variables are missing
    if not all([ZENDESK_API_TOKEN, ZENDESK_USER_EMAIL, ZENDESK_SUBDOMAIN]):
        print('Error: Missing required environment variables.')
        exit(1)

    # Set up HTTP Basic Authentication with email/token and API token
    AUTH = f'{ZENDESK_USER_EMAIL}/token', ZENDESK_API_TOKEN

    # Base API endpoint for incremental tickets export
    API_URL = f"https://{ZENDESK_SUBDOMAIN}.zendesk.com/api/v2/incremental/tickets.json"


    def iso_to_epoch(iso_str):
        """
        Convert ISO 8601 datetime string to epoch timestamp.
        Replaces 'Z' with UTC offset to ensure compatibility.
        """
        dt = datetime.fromisoformat(iso_str.replace("Z", "+00:00"))
        return int(dt.timestamp())


    def fetch_incremental_tickets(start_time, max_retries=5):
        """
        Fetches tickets incrementally starting from start_time.
        Handles pagination and rate limiting by respecting Retry-After header only.
        """
        params = {'start_time': start_time}
        tickets = []
        url = API_URL
        retry_count = 0

        while url:
            # Send the query parameters only with the first request;
            # next_page URLs already include their own query string
            request_params = params if url == API_URL else None
            response = requests.get(url, auth=AUTH, params=request_params)

            # Handle rate limiting (HTTP 429)
            if response.status_code == 429:
                if retry_count >= max_retries:
                    print("Max retry limit reached due to rate limiting.")
                    break
                retry_after = int(response.headers.get('Retry-After', 5))
                print(f"Rate limit exceeded. Retrying in {retry_after} seconds...")
                time.sleep(retry_after)
                retry_count += 1
                continue

            # Reset retry count after successful request
            retry_count = 0
            response.raise_for_status()
            data = response.json()
            tickets.extend(data.get('tickets', []))

            # Pagination: the time-based incremental export returns next_page and
            # end_of_stream at the top level of the response, not under meta
            if data.get('end_of_stream'):
                url = None
            else:
                url = data.get('next_page')
                params = None

            # Optional: Pause briefly between requests to ease load (tune or remove as needed)
            time.sleep(1)

        return tickets


    def export_tickets_to_csv(tickets, filename='tickets_export.csv'):
        """Export the list of tickets to a CSV file with selected fields."""
        fieldnames = ['id', 'subject', 'description', 'status', 'priority', 'created_at', 'updated_at']
        with open(filename, mode='w', newline='', encoding='utf-8') as csvfile:
            writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
            writer.writeheader()
            for ticket in tickets:
                # Write ticket data, removing newlines from description
                # (a null description is treated as an empty string)
                writer.writerow({
                    'id': ticket.get('id', ''),
                    'subject': ticket.get('subject', ''),
                    'description': (ticket.get('description') or '').replace('\n', ' ').replace('\r', ' '),
                    'status': ticket.get('status', ''),
                    'priority': ticket.get('priority', ''),
                    'created_at': ticket.get('created_at', ''),
                    'updated_at': ticket.get('updated_at', ''),
                })
        print(f"Exported {len(tickets)} tickets to {filename}")


    def main():
        # Define start date for incremental fetch, convert to epoch timestamp
        start_date_iso = "2024-01-01T00:00:00Z"
        start_epoch = iso_to_epoch(start_date_iso)

        # Fetch tickets incrementally since the start time
        tickets = fetch_incremental_tickets(start_epoch)
        print(f"Total tickets fetched: {len(tickets)}")

        # Export fetched tickets to a CSV file
        export_tickets_to_csv(tickets)


    if __name__ == '__main__':
        main()

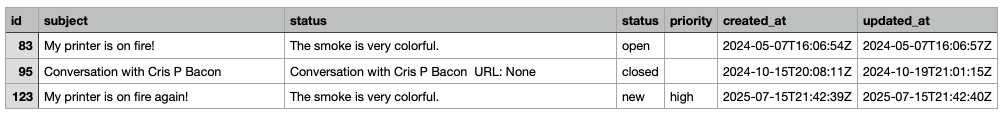

Here's an example output:

How it works

This script:

- Loads Zendesk API credentials and subdomain from environment variables and configures HTTP Basic Authentication with an API token.

- Converts a specified ISO 8601 start date into a Unix epoch timestamp.

- Uses the fetch_incremental_tickets function to retrieve tickets updated since the start time. This function:

  - Handles pagination using next_page URLs until all ticket pages are retrieved.

  - Implements rate limit handling by detecting HTTP 429 responses.

  - Combines tickets from all pages into a single list.

- Uses the export_tickets_to_csv function to write selected fields from the retrieved tickets to a CSV file.
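To see what the timestamp conversion produces, here is a small standalone sketch of the same conversion with arbitrary sample dates. One difference is an assumption made for this sketch: a string without a UTC offset is treated as UTC, whereas the script above would interpret such a value in the local time zone of the machine running it.

```python
from datetime import datetime, timezone


def iso_to_epoch(iso_str):
    """Convert an ISO 8601 string to a Unix epoch timestamp in seconds."""
    # Python versions before 3.11 reject a trailing 'Z' in fromisoformat(),
    # so it is replaced with an explicit UTC offset first.
    dt = datetime.fromisoformat(iso_str.replace("Z", "+00:00"))
    # Assumption for this sketch: a string without an offset is treated as UTC.
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)
    return int(dt.timestamp())


print(iso_to_epoch("2024-01-01T00:00:00Z"))       # 1704067200
print(iso_to_epoch("2024-01-01T01:00:00+01:00"))  # 1704067200
```

Both calls print the same value because they describe the same instant in different time zones.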

Exporting Zendesk tickets and related user information

In this example, you'll retrieve Zendesk tickets updated since a specified date and include user, group, and organization information before exporting everything to a CSV file. Compared to the previous example, which exported only basic ticket data, this provides a more detailed view of your tickets by including requester and assignee details. Like the previous example, this code handles API authentication and paginates through all results.

    import os
    import time
    import csv
    import requests
    from datetime import datetime

    # Load Zendesk API credentials and subdomain from environment variables
    ZENDESK_API_TOKEN = os.getenv('ZENDESK_API_TOKEN')
    ZENDESK_USER_EMAIL = os.getenv('ZENDESK_USER_EMAIL')
    ZENDESK_SUBDOMAIN = os.getenv('ZENDESK_SUBDOMAIN')

    # Check that all required environment variables are present; exit if any are missing
    if not all([ZENDESK_API_TOKEN, ZENDESK_USER_EMAIL, ZENDESK_SUBDOMAIN]):
        print('Error: Missing required environment variables.')
        exit(1)

    # Set up HTTP Basic Authentication with email/token and API token
    AUTH = f'{ZENDESK_USER_EMAIL}/token', ZENDESK_API_TOKEN

    # Base URL for all Zendesk API requests
    BASE_API_URL = f"https://{ZENDESK_SUBDOMAIN}.zendesk.com/api/v2"


    def iso_to_epoch(iso_str):
        """
        Convert ISO 8601 datetime string to epoch timestamp.
        Replace 'Z' with '+00:00' to indicate UTC timezone.
        """
        dt = datetime.fromisoformat(iso_str.replace("Z", "+00:00"))
        return int(dt.timestamp())


    def fetch_paginated(url, params=None, max_retries=5):
        """
        Fetch all pages of results from a paginated Zendesk API endpoint,
        handling rate limiting by waiting for the time given in the
        Retry-After header only (no exponential backoff).
        """
        results = []
        retry_count = 0

        while url:
            # params is set for the first request (including any retries of it) and
            # cleared after the first successful page, because next_page URLs
            # already include their own query string
            response = requests.get(url, auth=AUTH, params=params)

            if response.status_code == 429:
                # Rate limited. Wait per Retry-After header or 5s if missing/malformed
                if retry_count >= max_retries:
                    print("Max retry limit reached due to rate limiting.")
                    break
                retry_after = response.headers.get('Retry-After')
                wait_time = int(retry_after) if retry_after and retry_after.isdigit() else 5
                print(f"Rate limited. Sleeping for {wait_time} seconds before retrying...")
                time.sleep(wait_time)
                retry_count += 1
                continue  # Retry current request

            # Reset retries on successful response
            retry_count = 0
            response.raise_for_status()
            data = response.json()
            results.append(data)

            # Extract next page URL or end pagination. The time-based incremental
            # export returns next_page and end_of_stream at the top level
            url = None if data.get('end_of_stream') else data.get('next_page')
            params = None  # Clear parameters to avoid duplicate requests

        return results


    def fetch_incremental_tickets(start_time):
        """Fetch tickets incrementally since the given start_time."""
        url = f"{BASE_API_URL}/incremental/tickets.json"
        params = {"start_time": start_time, "include": "deleted"}
        all_pages = fetch_paginated(url, params)
        tickets = []
        for page in all_pages:
            tickets.extend(page.get("tickets", []))
        print(f"Fetched {len(tickets)} tickets")
        return tickets


    def fetch_users(user_ids):
        """
        Fetch user details for given user ids.
        Returns a dictionary mapping user id to user data.
        """
        users = {}
        if not user_ids:
            return users

        chunk_size = 100  # API limit for batch user fetch
        ids_list = list(user_ids)
        for i in range(0, len(ids_list), chunk_size):
            chunk = ids_list[i : i + chunk_size]
            ids_param = ",".join(str(uid) for uid in chunk)
            url = f"{BASE_API_URL}/users/show_many.json"
            params = {"ids": ids_param}
            response = requests.get(url, auth=AUTH, params=params)
            response.raise_for_status()
            # Parse returned user list and map ids to user objects
            for user in response.json().get("users", []):
                users[user["id"]] = user
        return users


    def fetch_groups(group_ids):
        """
        Fetch group details individually for each group id.
        Returns a dictionary mapping group id to group data.
        """
        groups = {}
        if not group_ids:
            return groups

        # Fetch groups individually
        for group_id in group_ids:
            url = f"{BASE_API_URL}/groups/{group_id}.json"
            response = requests.get(url, auth=AUTH)
            if response.status_code == 200:
                group = response.json().get("group")
                if group:
                    groups[group_id] = group
        return groups


    def fetch_organizations(org_ids):
        """
        Fetch organization details in batches for given org ids.
        Returns a dict mapping org id to organization data.
        """
        orgs = {}
        if not org_ids:
            return orgs

        chunk_size = 100  # API batch limit
        ids_list = list(org_ids)
        for i in range(0, len(ids_list), chunk_size):
            chunk = ids_list[i : i + chunk_size]
            ids_param = ",".join(str(org_id) for org_id in chunk)
            url = f"{BASE_API_URL}/organizations/show_many.json"
            params = {"ids": ids_param}
            response = requests.get(url, auth=AUTH, params=params)
            response.raise_for_status()
            # Parse organizations and map by id
            for org in response.json().get("organizations", []):
                orgs[org["id"]] = org
        return orgs


    def extract_ticket_data(ticket, users, groups, orgs):
        """
        Assemble a dictionary with key properties for a ticket including related entities.
        Suitable for CSV writing.
        """
        requester_id = ticket.get("requester_id")
        submitter_id = ticket.get("submitter_id")
        assignee_id = ticket.get("assignee_id")
        group_id = ticket.get("group_id")
        org_id = ticket.get("organization_id")

        requester = users.get(requester_id, {})
        submitter = users.get(submitter_id, {})
        assignee = users.get(assignee_id, {})
        group = groups.get(group_id, {})
        organization = orgs.get(org_id, {})

        requester_email = requester.get("email", "") or ""
        requester_domain = requester_email.split("@")[1] if "@" in requester_email else ""
        submitter_name = submitter.get("name") or requester.get("name", "")
        ticket_url = f"https://{ZENDESK_SUBDOMAIN}.zendesk.com/agent/tickets/{ticket.get('id', '')}"

        return {
            "ID": ticket.get("id", ""),
            "Requester": requester.get("name", ""),
            "Requester id": requester_id or "",
            "Requester external id": requester.get("external_id", ""),
            "Requester email": requester_email,
            "Requester domain": requester_domain,
            "Submitter": submitter_name,
            "Assignee": assignee.get("name", ""),
            "Assignee id": assignee_id or "",
            "Group": group.get("name", ""),
            "Subject": ticket.get("subject", ""),
            "Tags": ",".join(ticket.get("tags", [])),
            "Status": ticket.get("status", ""),
            "Priority": ticket.get("priority", ""),
            "Via": ticket.get("via", {}).get("channel", ""),
            "Created at": ticket.get("created_at", ""),
            "Updated at": ticket.get("updated_at", ""),
            "Organization": organization.get("name", ""),
            "Ticket URL": ticket_url,
        }


    def export_tickets_to_csv(tickets, users, groups, orgs, filename="tickets_export.csv"):
        """Write tickets and related record data to a CSV file with selected fields."""
        fieldnames = [
            "ID",
            "Requester",
            "Requester id",
            "Requester external id",
            "Requester email",
            "Requester domain",
            "Submitter",
            "Assignee",
            "Assignee id",
            "Group",
            "Subject",
            "Tags",
            "Status",
            "Priority",
            "Via",
            "Created at",
            "Updated at",
            "Organization",
            "Ticket URL",
        ]
        with open(filename, "w", newline="", encoding="utf-8") as csvfile:
            writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
            writer.writeheader()
            for ticket in tickets:
                row = extract_ticket_data(ticket, users, groups, orgs)
                writer.writerow(row)
        print(f"Exported {len(tickets)} tickets to {filename}")


    def main():
        start_date_iso = "2024-01-01T00:00:00Z"
        start_epoch = iso_to_epoch(start_date_iso)

        tickets = fetch_incremental_tickets(start_epoch)
        print(f"Total tickets fetched: {len(tickets)}")
        if not tickets:
            print("No tickets found.")
            return

        # Collect unique ids for users, groups, organizations referenced by tickets
        requester_ids = {t.get("requester_id") for t in tickets if t.get("requester_id")}
        submitter_ids = {t.get("submitter_id") for t in tickets if t.get("submitter_id")}
        assignee_ids = {t.get("assignee_id") for t in tickets if t.get("assignee_id")}
        group_ids = {t.get("group_id") for t in tickets if t.get("group_id")}
        org_ids = {t.get("organization_id") for t in tickets if t.get("organization_id")}

        # Fetch details for related entities
        users = fetch_users(requester_ids | submitter_ids | assignee_ids)
        groups = fetch_groups(group_ids)
        orgs = fetch_organizations(org_ids)

        # Export all data to CSV
        export_tickets_to_csv(tickets, users, groups, orgs)


    if __name__ == "__main__":
        main()

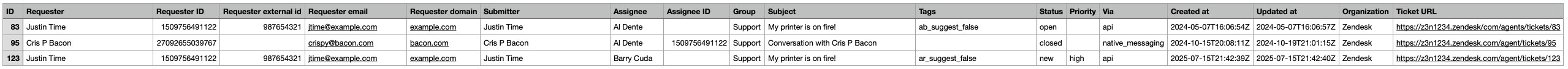

How it works

This script:

- Reads Zendesk API credentials and subdomain from environment variables and configures HTTP Basic Authentication with an API token to securely access the API.

- Converts an ISO 8601 date string to a Unix epoch timestamp and queries tickets updated since that time.

- Calls the fetch_incremental_tickets function to:

  - Retrieve tickets created or updated since the provided start_time timestamp.

  - Combine tickets from every page into a single comprehensive list.

  - Print the total number of tickets fetched and return the full aggregated list.

- Calls the fetch_users function to:

  - Retrieve detailed user information such as names, email addresses, and external ids.

  - Fetch users in batches for efficiency and map each user’s id to its corresponding data object.

- Calls the fetch_groups function to retrieve details about ticket groups like group names, which provide organizational context about ticket assignments.

- Calls the fetch_organizations function to get organization data linked to tickets, such as company names and related metadata.

- Calls the extract_ticket_data function to:

  - Consolidate key information for each ticket by combining ticket fields with related user, group, and organization details.

  - Format this data into a structured dictionary prepared for export or reporting.

- Calls the export_tickets_to_csv function to write the selected ticket and related fields into a CSV file.
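The lookup-and-merge step can be illustrated with in-memory sample data. This is a reduced sketch with only a few columns. The sample ticket, user, and group records are made up, and build_row is a hypothetical stand-in for extract_ticket_data.

```python
import csv
import io


def build_row(ticket, users, groups):
    """Merge one ticket with its related user and group records."""
    requester = users.get(ticket.get("requester_id"), {})
    group = groups.get(ticket.get("group_id"), {})
    email = requester.get("email") or ""
    return {
        "ID": ticket.get("id", ""),
        "Requester": requester.get("name", ""),
        "Requester domain": email.split("@")[1] if "@" in email else "",
        "Group": group.get("name", ""),
        "Tags": ",".join(ticket.get("tags", [])),
    }


# Made-up sample data shaped like the API objects used in the script.
tickets = [
    {"id": 1, "requester_id": 10, "group_id": 7, "tags": ["vip", "billing"]},
    {"id": 2, "requester_id": 11, "group_id": None, "tags": []},
]
users = {10: {"name": "Ana", "email": "ana@example.com"}}
groups = {7: {"name": "Support"}}

rows = [build_row(t, users, groups) for t in tickets]

# Write to an in-memory buffer instead of a file so the output is easy to inspect.
buffer = io.StringIO(newline="")
writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
writer.writeheader()
writer.writerows(rows)
print(buffer.getvalue())
```

The second ticket references a user and group that are missing from the lookup dictionaries, so its related columns are left empty instead of raising an error. The csv module also quotes the Tags value of the first ticket because it contains a comma.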

Retrieving unique ids

When you retrieve tickets from Zendesk, each ticket includes references to related records such as users (requesters, submitters, assignees), groups, and organizations, identified by unique ids. Because multiple tickets often reference the same records, the code above minimizes API calls by gathering only the unique ids for each record type and then fetching each record once: users and organizations in batches of up to 100 ids, and groups one at a time.

This snippet collects distinct ids for each record type from the entire list of tickets:

    requester_ids = {t.get("requester_id") for t in tickets if t.get("requester_id")}
    submitter_ids = {t.get("submitter_id") for t in tickets if t.get("submitter_id")}
    assignee_ids = {t.get("assignee_id") for t in tickets if t.get("assignee_id")}
    group_ids = {t.get("group_id") for t in tickets if t.get("group_id")}
    org_ids = {t.get("organization_id") for t in tickets if t.get("organization_id")}

- For each ticket t in the tickets list, it extracts the id for the specific record type. For example, the requester's id through t.get("requester_id").

- The conditional if t.get(...) filters out any tickets where that id might be missing or None.

- The set comprehension { ... } ensures that the collected ids are unique and not duplicates.
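A small example with made-up tickets shows the effect of the set comprehension. The helper unique_ids is hypothetical and simply generalizes the pattern used in the script.

```python
def unique_ids(tickets, key):
    """Collect the distinct, non-empty values of key across all tickets."""
    return {t.get(key) for t in tickets if t.get(key)}


# Made-up tickets: two share a requester, one has a null assignee,
# and one has no assignee_id key at all.
tickets = [
    {"id": 1, "requester_id": 10, "assignee_id": 20},
    {"id": 2, "requester_id": 10, "assignee_id": None},
    {"id": 3, "requester_id": 11},
]

requester_ids = unique_ids(tickets, "requester_id")
assignee_ids = unique_ids(tickets, "assignee_id")

print(sorted(requester_ids))  # [10, 11]
print(sorted(assignee_ids))   # [20]
# The | operator merges sets, so each user id appears only once.
print(sorted(requester_ids | assignee_ids))  # [10, 11, 20]
```

Three tickets yield only two requester ids, so the script would look up two requesters rather than three.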

Retrieving related records

After collecting the unique ids, the script retrieves detailed information for those entities:

    users = fetch_users(requester_ids | submitter_ids | assignee_ids)
    groups = fetch_groups(group_ids)
    orgs = fetch_organizations(org_ids)

- The sets of requester, submitter, and assignee ids are combined so that all relevant user ids are passed in a single call to fetch_users().

- fetch_users() makes API requests to retrieve user profiles, such as name, email, and external ids, for all collected user ids.

- fetch_groups() retrieves information about each group, like its name.

- fetch_organizations() retrieves information about organizations associated with the tickets, such as company names.
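Because the script requests users and organizations in groups of at most 100 ids, the batching logic is worth seeing on its own. The sketch below only builds the comma-separated ids values and makes no API calls. The names chunked and ids_params are hypothetical helpers, not functions from the script.

```python
def chunked(items, size):
    """Yield consecutive slices of items, each with at most size elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def ids_params(ids, chunk_size=100):
    """Build one comma-separated ids string per batch."""
    ordered = sorted(ids)  # sorted only to make the output deterministic
    return [",".join(str(i) for i in chunk) for chunk in chunked(ordered, chunk_size)]


# 250 made-up ids produce three batches: 100, 100, and 50 ids.
params = ids_params(set(range(1, 251)))
print(len(params))                          # 3
print([len(p.split(",")) for p in params])  # [100, 100, 50]
print(ids_params({3, 1, 2}, chunk_size=2))  # ['1,2', '3']
```

With this approach, 250 user ids need three requests instead of 250 individual lookups.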